October 22, 2020

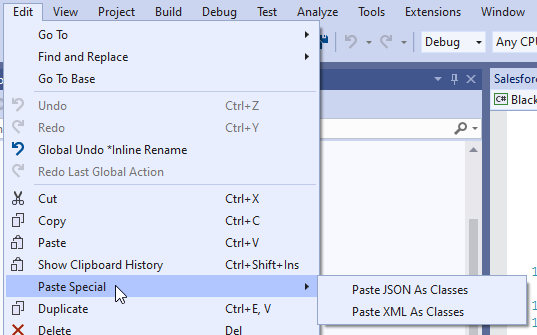

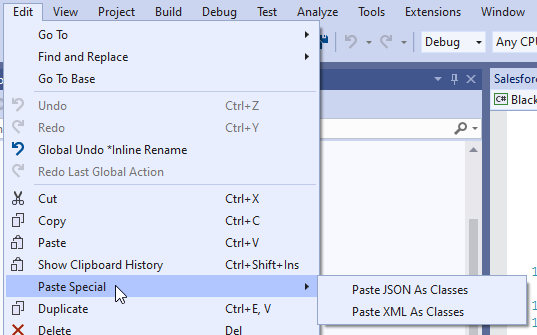

Just a little thing that I learned today. Here is the usual scenario: someone gave you an example JSON for some API that you need to implement, and the first step is to create classes for this JSON. There is a nice feature in Visual Studio for that: copy the JSON to the clipboard, then use Edit -> Paste Special -> Paste JSON As Classes.

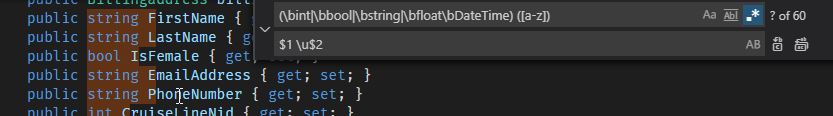

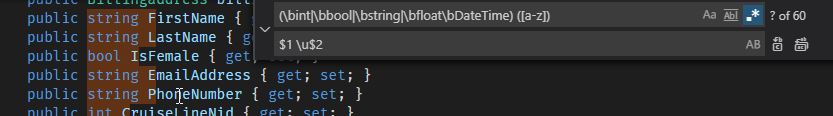

One problem for me was that all property names were lowercase. Fixing them manually was too boring, so I wrote a regex that replaces the first letter with an uppercase one:

(\bint|\bbool|\bstring|\bfloat|\bDateTime) ([a-z]) <- replace this

$1 \u$2 <- to this
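To check what the pattern matches outside the IDE, here is a small sketch in Python. Python is my choice for illustration only; the post itself uses the editor's find-and-replace. Python's `re.sub` has no `\u` escape in the replacement string, so a function uppercases the second group instead. The sample class below is made up. Every alternative in the pattern is separated by `|`.

```python
import re

# Same idea as the editor regex: a type name, a space, then a lowercase first letter.
PATTERN = re.compile(r"(\bint|\bbool|\bstring|\bfloat|\bDateTime) ([a-z])")


def capitalize_properties(source: str) -> str:
    """Uppercase the first letter of every name that follows one of the listed types."""
    # Equivalent of "$1 \u$2": keep group 1, uppercase group 2.
    return PATTERN.sub(lambda m: f"{m.group(1)} {m.group(2).upper()}", source)


# Hypothetical output of "Paste JSON As Classes" for a tiny JSON object.
sample = """public class Rootobject
{
    public int id { get; set; }
    public string name { get; set; }
    public DateTime createdAt { get; set; }
}"""

print(capitalize_properties(sample))
```

Types that are not in the list (for example `long` or `double`) are left untouched, so the alternation has to be extended for other JSON shapes.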

Not sure that it will be useful for anyone; I’m just proud of myself every time I get to use regex 🤓

tags:

September 1, 2020

...deployed this blog to Azure Web Apps successfully. But it was a bumpy ride, let me tell you.

How it was before

I started this blog as a sandbox project, and the first things I learned in 2017 were how to build a Docker image, how to push it to a repository and how to build a .NET Core application. So for every new version I manually ran these scripts:

SERVICE="hysite"
pushd ./Hysite.Web
dotnet publish -c Release
popd
docker build -t "$SERVICE" .

SERVICE="hysite"
docker tag "$SERVICE" hyston/"$SERVICE":latest
docker push hyston/"$SERVICE":latest

After that, I had to SSH into my Linode Linux VM and run a third script there:

docker stop $(docker ps -aq)
docker rm $(docker ps -aq)
docker rmi $(docker images -q)
docker run --rm -d -p 80:5000 -v /root/logs:/app/logs hyston/hysite:latest

I think everything here is self-explanatory and simple enough to be written by someone who has just started looking for ways to deploy simple applications to the cloud. Deploying a new version meant running all three scripts in sequence: cumbersome, but reliable.

There were two things I didn’t like about this approach:

- All of this can be automated: run the first two scripts on every push to master, and trigger the third one whenever a new image is pushed to Docker Hub.

- I was using a Linux virtual machine for a single reason: to run Docker on it. In the first versions things were a bit more complicated (I stored posts in a folder shared with the container), but now the VM is just an environment that runs Docker, which is, in my opinion, overkill.

Goal

What I was looking for was a service that could take a Dockerfile and access to the repository, then restore, build and publish the app, create a Docker image containing the latest version and deploy it to some cloud. All of this should happen automatically on every new commit to master, without any manual steps. Ideally, it should also check beforehand that the tests pass and the build succeeds, and notify me if something is wrong.

Dead ends

Because I use only one image, I ignored all solutions that have “Kubernetes” in the name. Dunno, it just scared me a little; I was looking for a simple way to start. I knew that there are two major cloud providers, Azure and AWS, and for my purposes I decided to stick with Azure. They have a relatively new service called Container Instances, which seemed to be meant for exactly what I need: take a Dockerfile, build the image, run it and don’t ask any more questions.

Besides the Azure portal, Microsoft has a product called “Azure DevOps Pipelines”, whose purpose is still a little unclear to me. I created a pipeline that took the repository, built my project and ran it in a container instance, but then I hit a problem.

All posts on this site are stored in a private GitHub repository, and the first thing hyston.blog does on startup is sign in to GitHub and load all posts. To do this, obviously, I need to store my GitHub credentials. When I built the site on my local machine, the username and password were stored in web.Production.config, which was gitignored. It is possible to add Azure Pipelines secrets containing the GitHub username and password and pass them to Docker as environment variables, but then they end up as plain Linux environment variables, which is considered insecure. There is another option, which I’ll describe later, but back then I decided that this was a stop sign for using Container Instances. The other reason that pushed me away from Container Instances was this comment on Stack Overflow:

Azure Web App for Containers is targeted at long running stuff (always running) while ACI are aimed at scheduled\burstable\short lived workloads (similar to Azure Functions).

So, despite already having a running instance in ACI (without GitHub credentials, though), I switched to Azure Web App. And then the problems started falling on my head.

Azure Web App

At first, I decided to create a web app that deploys the code directly, so that I would not have to care about Docker at all. There is a “deployment configuration” option in the web app which (if I remember correctly, it uses DevOps Pipelines under the hood) works as a wizard. At first it refused to log in to my GitHub account. After a day or two the issue went away, and I was able to deploy my code directly in a couple of clicks.

But then another problem appeared: there is no way to choose which Linux distribution is used to run the code. For GitHub integration my blog uses LibGit2Sharp, which in turn uses a very specific native Git library that exists only for Ubuntu LTS. Some details about this issue can be found here. For the Docker image, I just added ‘-bionic’ to the .NET runtime image tag, and it ran on Ubuntu. But with the automatic deployment configuration the base image was Debian, and my site threw an exception when trying to use LibGit2Sharp.

At this point, I was frustrated with the Azure portal overall and confused by Azure DevOps Pipelines (as I understood it, these are two different services that use different accounts). So I moved on to GitHub Actions.

GitHub Actions

GitHub Actions open up many possibilities. The simplest way is to build the .NET Core app directly and deploy the binaries somewhere. Because I had already added a build stage to my Dockerfile, I left the compilation there, although I don’t like this solution anymore: wherever I deploy it, it doesn’t reuse the cache from previous builds. It is possible to use my own machine as a self-hosted runner, but I currently don’t have a computer that runs all the time.

Azure Key Vault

I’ll describe the deployment script later, but before that there was still one unresolved problem: I need GitHub credentials at runtime, and I still don’t want to store them in an open-source configuration. Since I stuck with Azure, I decided to use Azure Key Vault. It is an overloaded and cumbersome service (as everything from Microsoft), but it turned out to be well documented (probably even over-documented, since I found several very similar tutorials), and with this tutorial I added support for it to my code. When I run the site locally, it takes the Azure credentials from my machine (because I have the Azure CLI installed and am logged in), and in Docker I added environment variables that are imported from build arguments. This is more secure than storing plain GitHub credentials in the environment, because with the Azure client ID the secrets can be retrieved only from the Azure web app.

To pass them to the Dockerfile, I store them in GitHub secrets, which cannot be viewed once saved. So, to recap:

- Azure credentials (client ID, tenant ID and client secret) are stored in GitHub secrets.

- When the GitHub Action runs, it builds the Docker image with those secrets as build arguments.

- In the Dockerfile, they are converted into environment variables.

- When the code creates an Azure.Security.KeyVault.Secrets.SecretClient, it picks up the Azure credentials from the environment automatically.

- With this client, I request my GitHub username/password from Azure Key Vault.

It may sound overcomplicated (which is what kept me from using it for a long time), but in hindsight this is the most secure solution I can see.
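The weak link in this chain is the hand-off between the Dockerfile and the app: if one variable is missing, the failure shows up only as a vague authentication error at startup. Below is a minimal Python sketch of a fail-fast check. It assumes the Dockerfile exports the three values under the names that Azure.Identity's `EnvironmentCredential` reads (`AZURE_TENANT_ID`, `AZURE_CLIENT_ID`, `AZURE_CLIENT_SECRET`). The post does not name its variables, so that is an assumption, and `load_service_principal` is a hypothetical helper, not part of any Azure SDK.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Variable names read by Azure.Identity's EnvironmentCredential for a client-secret login.
REQUIRED_VARS = ("AZURE_TENANT_ID", "AZURE_CLIENT_ID", "AZURE_CLIENT_SECRET")


@dataclass(frozen=True)
class ServicePrincipal:
    tenant_id: str
    client_id: str
    client_secret: str


def load_service_principal(env: Optional[Mapping[str, str]] = None) -> ServicePrincipal:
    """Hypothetical helper: fail fast if the container did not receive all three variables."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return ServicePrincipal(env["AZURE_TENANT_ID"], env["AZURE_CLIENT_ID"], env["AZURE_CLIENT_SECRET"])
```

In real code the SDK credential does the actual login. A check like this only makes a misconfigured build argument obvious.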

GitHub Actions (again)

To finish my GitHub Action I needed only one more step: deploy the built image. After all the issues I had run into with DevOps Pipelines, this was easy.

- First, create an Azure Container Registry, where the actual images are stored. On the “Access keys” tab, enable the admin user, whose credentials are used to access the images.

- Add an azure/docker-login step to the GitHub Action to log in with these admin credentials.

- After docker build, push the image to the container registry.

- Once the image is pushed, use azure/webapps-deploy to do the actual deployment. This requires storing another GitHub secret: the Azure publish profile.

- Back in Azure, the web app needs permission to pull the image from the registry. This is well described here.

Happy end

And that’s basically it. It took me more than an hour to write this post about a thing that took me almost a month to figure out. In the end it is just two files (Dockerfile and deploy.yaml), an Azure account and several settings in the Azure portal and GitHub. I hope that in a year, when I have forgotten all of this and need to implement something similar, this post will help me refresh my memory.

P.S. Have I mentioned how I hate YAML’s indentation-based structure? 🤯 It is good in theory and keeps things organised, but I made way too many errors with it during these adventures.

tags:

June 19, 2020

Disclaimer: here is an example of refactoring that is neither optimised nor well polished. With more experience I'll probably find a better way to achieve what is written here. I have a strong feeling that this is the kind of code I'll look back at N years later with only one thought: "who the ... wrote this??"

However, I want to share this experience anyway to have a reference later.

Just imagine the situation: you have 40+ classes implementing the same interface, and all of them have a method with the same signature and almost the same body, except for one generic parameter, which is the class type itself.

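using System.Threading.Tasks;
using Hangfire;

interface IBaseJob
{
    void Run(IJobCancellationToken token);
}

public class ConcreteJob : IBaseJob
{
    public void Run(IJobCancellationToken token)
    {
        /* implementation */
    }

    // BackgroundJob.Enqueue returns the job id (a string), so the Task is returned explicitly
    public Task Execute()
    {
        BackgroundJob.Enqueue<ConcreteJob>(job => job.Run(JobCancellationToken.Null));
        return Task.CompletedTask;
    }
}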

I usually remove unnecessary classes and types from examples, but these Hangfire types will be important later.

What I don't like here is the Execute method, which is repeated in several dozen other classes with only a small difference:

public Task Execute()
{
    BackgroundJob.Enqueue<AnotherConcreteJob>(job => job.Run(JobCancellationToken.Null));
    return Task.CompletedTask;
}

That sounds like an opportunity to move some functionality into a base class. C# 8 allows default interface implementations, but we still use C# 7.3, so all I could do was replace the interface with an abstract class.

public abstract class IBaseJob
{
    public abstract void Run(IJobCancellationToken token);

    public Task Execute(IJobExecutionContext context)
    {
        //@todo: call here BackgroundJob.Enqueue<ThisJobType>(job => job.Run(JobCancellationToken.Null));
        return Task.CompletedTask;
    }
}

...and all the duplicated Execute methods can be thrown away! 🥳 However, there is still a small problem: how do we call Enqueue properly? We cannot call it directly, because the concrete job type is not known at compile time, so we need to construct the call at runtime.

In computer science, reflection is the ability of a process to examine, introspect, and modify its own structure and behavior. Not to be confused with Self-Reflection (Philosophy).

Wikipedia

A well-experienced C# developer has access to the types, methods and all the other stuff that was used to write the code they are currently writing 🤪 We start with the type Type, which describes a type. Enqueue is a static method of BackgroundJob, so we need that type and its list of methods:

typeof(BackgroundJob) // a System.Type object describing BackgroundJob
    .GetMethods()     // an array of MethodInfo, one per public method

At first I thought I could just get the method by name (.GetMethod("Enqueue")), but there are 4 methods with that name, and a friendly AmbiguousMatchException kindly asked me to be less ambiguous. This is a common problem with reflection code: you usually don't find out at compile time that something is wrong; all errors show up only when you actually run the code.

So the method I needed is 1) generic and 2) takes an Expression<Action<T>> as its argument. I will explain later what an Expression is and why it wraps an Action.

GetMethods returns an array of MethodInfo, a class that describes everything about a concrete method. Then we call GetParameters to, well, get the method's parameters. It is probably easier to explain with comments in the code:

// needs: using System; using System.Linq; using System.Linq.Expressions; using Hangfire;
var enqueueMethod = typeof(BackgroundJob)
    .GetMethods() // We have all public BackgroundJob methods here
    .Where(m =>
        m.Name == "Enqueue" &&
        m.IsGenericMethod &&
        m.GetParameters().Length == 1) // Keep only generic methods with the proper name and one parameter
    .Select(m => new
    {
        method = m,
        paramType = m.GetParameters().Single().ParameterType
    }) // Keep the parameter type for further checks, plus the MethodInfo itself to return it later (an anonymous type)
    .Where(p =>
        p.paramType.IsGenericType && // GetGenericTypeDefinition() throws on non-generic types
        p.paramType.GetGenericTypeDefinition() == typeof(Expression<>) && // The parameter type should be 'Expression<>'
        p.paramType.GetGenericArguments().Length == 1 &&
        p.paramType.GetGenericArguments().First().IsGenericType &&
        p.paramType.GetGenericArguments().First().GetGenericTypeDefinition() == typeof(Action<>)) // We need to go deeper and check the generic arguments of the generic arguments 🧐
    .Select(p => p.method) // If the single parameter has type Expression<Action<>>, we are good
    .Single(); // And I'm sure there is only one of them. However, that's fragile: if someone updates Hangfire and another method matches these criteria, Single() will throw
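Python has no method overloading, so the closest analogue of this search is a set of differently named functions whose parameter annotations have to be inspected. The sketch below mirrors the LINQ filter above with `inspect` and `typing.get_origin`/`get_args`: `get_origin` plays the role of GetGenericTypeDefinition and `get_args` that of GetGenericArguments. `BackgroundJob` and its methods are hypothetical stand-ins, not Hangfire's API, and the language switch is only for illustration.

```python
import inspect
import typing
from collections.abc import Callable
from typing import Awaitable, TypeVar

T = TypeVar("T")


class BackgroundJob:
    """Hypothetical stand-in: without overloads, the 'overloads' get different names."""

    @staticmethod
    def enqueue(job: typing.Callable[[T], None]) -> str:  # like Enqueue<T>(Expression<Action<T>>)
        return "enqueued"

    @staticmethod
    def enqueue_async(job: typing.Callable[[T], Awaitable[None]]) -> str:  # like Func<T, Task>
        return "enqueued async"

    @staticmethod
    def enqueue_to_queue(queue: str, job: typing.Callable[[T], None]) -> str:  # two parameters
        return f"enqueued to {queue}"

    @staticmethod
    def schedule(job: typing.Callable[[T], None]) -> str:  # wrong name
        return "scheduled"


def takes_action_of_one_arg(annotation) -> bool:
    """Rough analogue of checking GetGenericTypeDefinition() == typeof(Action<>)."""
    if typing.get_origin(annotation) is not Callable:
        return False
    params, returns = typing.get_args(annotation)
    return isinstance(params, list) and len(params) == 1 and returns is type(None)


def find_enqueue(cls):
    matches = []
    for name, fn in inspect.getmembers(cls, predicate=inspect.isfunction):
        params = list(inspect.signature(fn).parameters.values())
        if name.startswith("enqueue") and len(params) == 1 and takes_action_of_one_arg(params[0].annotation):
            matches.append(fn)
    # Same contract as LINQ's Single(): exactly one match, otherwise fail loudly.
    if len(matches) != 1:
        raise LookupError(f"expected exactly one matching method, found {len(matches)}")
    return matches[0]
```

The same fragility applies: a new matching "overload" makes `find_enqueue` raise instead of silently picking one.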

And now we have the MethodInfo of what we need to call!

Can we call it? No, not yet.

Remember that 'Action<T>'? We need to put the current job type there. It can be found easily with GetType(). The difference from typeof is that typeof(SomeType) is resolved at compile time for a type you name explicitly, while GetType() returns the actual runtime type of the instance. Called inside the base class, it returns the concrete job type, which is exactly what we need. Then we construct the proper method using MakeGenericMethod:

var concreteJobType = GetType(); // the runtime type, e.g. ConcreteJob
enqueueMethod = enqueueMethod.MakeGenericMethod(concreteJobType);

Now we are talking!
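For comparison, in Python the type of an instance is just a value that can be passed around, so the same refactoring needs no reflection at all. The sketch below only illustrates the GetType() point: `type(self)` inside the base class yields the concrete subclass at runtime. `BackgroundJob` here is a hypothetical stand-in that records job types instead of queueing anything, and the job classes are made up.

```python
from typing import Callable, List, Optional, Type


class BackgroundJob:
    """Hypothetical stand-in for Hangfire's BackgroundJob: it only records which job type was enqueued."""

    enqueued: List[str] = []

    @classmethod
    def enqueue(cls, job_type: Type["BaseJob"], call: Callable[["BaseJob"], None]) -> str:
        cls.enqueued.append(job_type.__name__)
        call(job_type())  # a real queue would persist the call and run it later
        return f"job-{len(cls.enqueued)}"


class BaseJob:
    def run(self, token: Optional[object]) -> None:
        raise NotImplementedError

    def execute(self) -> str:
        # type(self) is evaluated at runtime, like GetType() in C#:
        # for a ConcreteJob instance it is ConcreteJob, not BaseJob.
        return BackgroundJob.enqueue(type(self), lambda job: job.run(None))


class ConcreteJob(BaseJob):
    runs = 0

    def run(self, token: Optional[object]) -> None:
        ConcreteJob.runs += 1


class AnotherConcreteJob(BaseJob):
    def run(self, token: Optional[object]) -> None:
        pass
```

Calling `ConcreteJob().execute()` and then `AnotherConcreteJob().execute()` records both concrete type names, although `execute` is defined only once.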

But there is still one small detail: the Enqueue method is not just generic, it also takes a generic argument. And this argument is itself an Expression that takes an Action as its generic argument, which broke my brain for a second when I was trying to figure it out 🤯

An expression is a sequence of one or more operands and zero or more operators that can be evaluated to a single value, object, method, or namespace.

Microsoft docs

That didn't make anything clearer for me. An expression is a small piece of code described as data: a variable, a constant, a method call or a method argument. Let's say we have the code a + 3. We can represent it as an expression tree, where a would be an Expression.Variable, 3 would be an Expression.Constant, and the whole line would be an Expression.Add with the previous two as its operands. A lambda itself can be represented as an Expression too. And now we need to build an expression that represents the code job => job.Run(JobCancellationToken.Null), where job is of the current type.

var cancellationTokenArgument = Expression.Constant(JobCancellationToken.Null, typeof(IJobCancellationToken));
var jobParameter = Expression.Parameter(concreteJobType, "job");
var runMethodInfo = concreteJobType.GetMethod("Run");
var callExpression = Expression.Call(jobParameter, runMethodInfo, cancellationTokenArgument);
var lambda = Expression.Lambda(callExpression, jobParameter);
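Python's `ast` module offers a comparable way to build code as data. This sketch builds both `a + 3` and a Python version of the `job => job.Run(...)` lambda as trees, then compiles them, which is roughly what LambdaExpression.Compile() does in .NET. It is an analogue for illustration only, not how System.Linq.Expressions or Hangfire work internally. `run` stands in for the post's `Run`, and `None` stands in for `JobCancellationToken.Null`.

```python
import ast


def build_a_plus_3() -> ast.Expression:
    # a + 3 as a tree: the Python counterpart of Expression.Add(variable, constant)
    body = ast.BinOp(left=ast.Name(id="a", ctx=ast.Load()), op=ast.Add(), right=ast.Constant(value=3))
    return ast.fix_missing_locations(ast.Expression(body=body))


def build_run_lambda() -> ast.Expression:
    # lambda job: job.run(None), the counterpart of job => job.Run(JobCancellationToken.Null)
    call = ast.Call(  # like Expression.Call(jobParameter, runMethodInfo, cancellationTokenArgument)
        func=ast.Attribute(value=ast.Name(id="job", ctx=ast.Load()), attr="run", ctx=ast.Load()),
        args=[ast.Constant(value=None)],
        keywords=[],
    )
    params = ast.arguments(  # the parameter list, like Expression.Parameter(concreteJobType, "job")
        posonlyargs=[], args=[ast.arg(arg="job")], vararg=None,
        kwonlyargs=[], kw_defaults=[], kwarg=None, defaults=[],
    )
    return ast.fix_missing_locations(ast.Expression(body=ast.Lambda(args=params, body=call)))


def evaluate(tree: ast.Expression, variables: dict):
    # compile() turns the tree into executable code; eval() runs it with the given variables
    return eval(compile(tree, "<tree>", "eval"), {}, variables)
```

`evaluate(build_a_plus_3(), {"a": 4})` gives 7, and `evaluate(build_run_lambda(), {})` returns a function that calls `run(None)` on whatever object it receives.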

So, finally, we have everything: the method we need to call and the arguments to call it with. Now we can do the magic with MethodInfo.Invoke:

object[] args = { lambda };
enqueueMethod.Invoke(null, args); // null instance: Enqueue is static

A couple of notes on this code:

- The Enqueue method is static, so the first argument to Invoke is null. Otherwise it would be the object whose method is invoked.

- You can't pass the argument directly; you need to wrap it in an array. This would be a good place for variadic params arguments, but it is what it is.

- Don't forget to wrap the call in try/catch, or catch exceptions somewhere else. As I wrote above, using reflection means you get more errors at runtime instead of at compile time. Exceptions thrown inside the invoked method arrive wrapped in a TargetInvocationException.

Was it all worth it?

So now we have one method in the base class instead of many (many, many) small methods in dozens of files. Did the code become clearer? I doubt it. Is it faster? Definitely not. We just followed the DRY principle and removed duplicated code. I guess the most valuable benefit is the experience of writing code that works with reflection and expressions. In day-to-day business logic I don't often get a chance to use them 😋

We have another example of reflection in our project: every time someone adds a new field to the class that represents possible user rights, a startup tool changes the database to add the new columns to the users table. Otherwise, reflection and expressions are mostly used in infrastructure code like LINQ, AutoMapper, etc.
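The user-rights tool can be sketched the same way. The post doesn't show the real class, table or SQL dialect, so everything here is hypothetical: the `UserRights` dataclass, the `users` table name and the `BOOLEAN` column type are assumptions, and Python's `dataclasses.fields` stands in for C# reflection over the class's fields.

```python
from dataclasses import dataclass, fields
from typing import Iterable, List


@dataclass
class UserRights:
    """Hypothetical rights class: every bool field is one permission."""
    can_read: bool = False
    can_write: bool = False
    can_publish: bool = False


def missing_column_statements(rights_cls, existing_columns: Iterable[str], table: str = "users") -> List[str]:
    """Reflect over the rights fields and emit ALTER TABLE for columns the table does not have yet."""
    existing = {c.lower() for c in existing_columns}
    return [
        f"ALTER TABLE {table} ADD COLUMN {f.name} BOOLEAN NOT NULL DEFAULT FALSE"
        for f in fields(rights_cls)
        if f.type is bool and f.name.lower() not in existing
    ]
```

Adding a field to `UserRights` is then enough: on the next startup the tool would emit exactly one new ALTER TABLE statement for it.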

tags:

June 3, 2020

⌨️

Because of unusual circumstances, on a regular day I work on a Windows laptop before lunch and on a MacBook after. Both are in clamshell mode, with closed lids, connected to the same monitor that I brought from work.

With the Mac I have used a Magic Keyboard for the last several years, but my hands refuse to type on an Apple keyboard in Windows. Even after I used SharpKeys in Windows 10 to remap ⌘ to Ctrl and ⌥ to Alt, it felt clumsy and wrong. So I started looking for a new keyboard. My requirements were simple:

- No numpad, to reduce the size

- Full-size arrow keys in an inverted T shape with a gap around them

- Home, End and Page Up/Down keys in their original places

- Wireless, with USB-C charging

- Separate play/pause and volume media keys (or the possibility to use them with Fn)

- A cool-looking color and, ideally, mechanical, but with quiet switches

- Under 100€. Actually, in the current situation, as cheap as possible, but I still didn't want to buy crap and suffer with it for the next several years.

I didn't find many options. Actually, the Keychron K1 was the only one that met every criterion. I'm sure there are more, but that's the one I got, and I'm happy with it. And now I kinda get all the buzz about mechanical keyboards: it feels really nice to type on, even with such a low profile. I even thought about connecting it to the Mac, but for me this is already a Windows keyboard. It has a Mac/Win switch, but I just can't press "⌥ + Q" on a keyboard where I'm used to pressing "Alt + F4", and vice versa.

There are some minor drawbacks, too. The RGB lights are uneven and gimmicky (in my opinion). The keyboard has a dozen lighting modes, but I found only two of them useful and not distracting. Sometimes the switches (I chose reds) are too sensitive, and keypresses get registered even when I just rest my hand on the keyboard.

🎧

My other purchase was motivated by my son. After he dropped my beloved Bose QC25, the right ear speaker stopped working. I always said that they were the best purchase of my professional career: these headphones helped me survive noisy offices for several years. Even though I'm not in an office anymore, I simply cannot concentrate on code without closed headphones on my head, ideally with noise cancellation. After a week without headphones at home, I got sick of the kids crying and the TV in the next room, and ordered the Bose 700 (they have a longer full name, but I don't care). This is the best thing I have done to improve my productivity during quarantine. When I wear them, I shut out the rest of the world and concentrate on my tasks. I'm not impressed by the sound quality (even the QC25, AirPods and cheap JBL earbuds sound better, in my opinion), but the Bose 700 are the most comfortable and completely block surrounding sounds. When I turn them off, I feel like I'm on a plane that is taking off: suddenly I hear the roaring fans of both laptops.

These headphones can also be connected to two devices at once; for me they are usually connected to an iPhone and an XPS 15. There is one issue related to that: if one device starts playing sound (no matter which one), the other device gets muted (without pausing playback). For example, when I watch a video or listen to music on the laptop and quickly check my phone, the phone's lock sound mutes the laptop until I press stop/play again. A minor issue, but annoying. With an iPhone/Mac pair the sound is also muted, but the audio switches back very quickly.

The last purchase that made home life considerably nicer: a JBL Flip 5. Now I can listen to relaxing jazz with my family or some cheerful hip-hop/rock while cooking. 🎸

tags: gadgets

May 22, 2020

I finally added info about myself and an RSS feed!

tags:


© Copyright 2026 version: f10d173

100% JS-free