February 14, 2022

I have been writing C# for 5 years now, and I keep finding features that are not widely used even though they have existed for a long time. Just recently I found a real need for the dynamic keyword.

Usually new variables are declared either with the type written out explicitly or with var:

```csharp
Foo foo = new Foo(); // explicit type
var foo = new Foo(); // type inferred by the compiler
```

These two lines are equivalent: in the second one the compiler infers the actual type from the right-hand side of the assignment.

But what if the type is unknown at compile time? I ran into a problem where the actual type is determined by an external parameter, and there is no common interface that could be used to aggregate the possible types. In these uncommon scenarios dynamic can be very handy.

Suppose we have three types:

```csharp
public record TypeA(string A);
public record TypeB(string B);
public record TypeC(string C);
```

Then we can declare a function which returns one of these types at random:

```csharp
dynamic GetRandomType(Random random) => random.Next(3) switch
{
    0 => new TypeA("a"),
    1 => new TypeB("b"),
    2 => new TypeC("c"),
    _ => throw new Exception("out of random range")
};
```

By making the return type dynamic, we tell the compiler not to care which type it actually is. The problem is that the compiler agrees and skips all further compile-time checks:

```csharp
var random = new Random();
var result = GetRandomType(random); // result is dynamic

result.DoSomething(); // compiles, but fails at run time with a RuntimeBinderException
```
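A minimal sketch of what that runtime failure looks like, assuming a plain console app on .NET 5 or later. An anonymous object stands in for TypeA/TypeB/TypeC here; when the member does not exist, the C# runtime binder throws `Microsoft.CSharp.RuntimeBinder.RuntimeBinderException`:

```csharp
using System;
using Microsoft.CSharp.RuntimeBinder;

// An anonymous object stands in for TypeA/TypeB/TypeC from above.
dynamic result = new { A = "a" };
bool bindingFailed = false;

try
{
    // This compiles: member lookup on a dynamic value is deferred to run time.
    result.DoSomething();
}
catch (RuntimeBinderException ex)
{
    // At run time the binder finds no DoSomething member and throws.
    bindingFailed = true;
    Console.WriteLine($"Runtime binding failed: {ex.Message}");
}
```

Catching the exception only moves the failure around; whenever a shared interface or base type exists, it is still the safer choice.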

The good thing is that the actual type is still available at run time, and overload resolution works even with dynamic arguments: the overload is picked at run time from the actual type of the value.

```csharp
public class Foo
{
    public static void Do(TypeA obj) => Console.WriteLine($"Do a: {obj.A}");
    public static void Do(TypeB obj) => Console.WriteLine($"Do b: {obj.B}");
    public static void Do(TypeC obj) => Console.WriteLine($"Do c: {obj.C}");
}

Foo.Do(result); // the correct overload is called, based on the runtime type of result
```
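Because the overload is resolved at run time, a value that matches no overload fails the same way as a missing member. A minimal sketch, with a hypothetical `Describe` method that returns strings instead of printing, plus an `object` overload as a catch-all:

```csharp
using System;
using System.Collections.Generic;

// Values of different runtime types, all typed as dynamic at compile time.
dynamic[] values = { new TypeA("a"), new TypeB("b"), 42 };
var lines = new List<string>();

foreach (dynamic value in values)
{
    // The overload is chosen at run time from the actual type of value.
    string line = Foo.Describe(value);
    lines.Add(line);
}

lines.ForEach(Console.WriteLine);

public record TypeA(string A);
public record TypeB(string B);

public static class Foo
{
    // Hypothetical variant of Foo.Do that returns the text instead of printing it.
    public static string Describe(TypeA obj) => $"Do a: {obj.A}";
    public static string Describe(TypeB obj) => $"Do b: {obj.B}";

    // Catch-all: without it, Describe(42) would throw RuntimeBinderException.
    public static string Describe(object obj) => $"Unknown: {obj}";
}
```

The runtime binder prefers the most specific applicable overload, so TypeA and TypeB still go to their own methods and only the int falls through to `object`.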

This can be very handy in places where the actual behaviour is not known beforehand. For example, I wrote an event parser where each event contains an enum with the event type and an object payload. For each kind of event the payload is a completely different DTO, and the event handlers have completely different behaviour as well.
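A rough sketch of such a parser. Everything here is hypothetical (the `EventKind` enum, the JSON payloads, the two DTOs and the `Handlers` class), since the real parser is not shown: the enum picks the DTO type, System.Text.Json deserializes into it, and dynamic dispatch picks the handler.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical incoming events: an enum with the event kind plus a raw JSON payload.
var incoming = new (EventKind Kind, string Json)[]
{
    (EventKind.UserCreated, "{\"Name\":\"Ann\"}"),
    (EventKind.OrderPlaced, "{\"OrderId\":7,\"Item\":\"donut\"}"),
};

var log = new List<string>();
foreach (var (kind, json) in incoming)
{
    // The enum decides which DTO to deserialize into; the DTOs share no interface.
    Type dtoType = kind switch
    {
        EventKind.UserCreated => typeof(UserCreated),
        EventKind.OrderPlaced => typeof(OrderPlaced),
        _ => throw new ArgumentOutOfRangeException(nameof(kind)),
    };
    dynamic payload = JsonSerializer.Deserialize(json, dtoType)!;

    // Run-time overload resolution picks the handler for the actual DTO type.
    string line = Handlers.Handle(payload);
    log.Add(line);
}

log.ForEach(Console.WriteLine);

// Hypothetical event kinds, DTOs and handlers.
public enum EventKind { UserCreated, OrderPlaced }
public record UserCreated(string Name);
public record OrderPlaced(int OrderId, string Item);

public static class Handlers
{
    public static string Handle(UserCreated e) => $"Welcome, {e.Name}";
    public static string Handle(OrderPlaced e) => $"Order {e.OrderId}: {e.Item}";
}
```

Adding a new event then means adding a DTO, one switch arm and one `Handle` overload, with no shared base type required.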

tags: csharp, dev

January 3, 2022

Most current frontend frameworks are built around a virtual DOM. All UI changes are applied to this structure, which is then compared to the real DOM, and only then does the framework update the actual page. Just before Christmas, when there was not a lot to do, a colleague mentioned the Svelte framework to me, which uses a different approach, and I decided to give it a try.

TL;DR: using a virtual DOM is slow; the DOM updates can be written out directly, and that code can be generated at compile time. Svelte moves complexity from the client browser at run time to the developer machine at compile time.

All code is written in HTML-like files with the .svelte extension. On build (svelte-kit build), the code in <script> gets compiled into JavaScript and <style> goes into CSS. I have not made any real comparisons on big projects, but small hello worlds load really fast, and the build generates a lot of "compiled" JavaScript code that quickly becomes unreadable.

For me, actually, the biggest benefit was the flat learning curve. This is a component-based framework that is really easy to start with. I have been using React lately, and I find that it requires too much conventional boilerplate code. For example, here is a hello-click-me-world example in Svelte:

```html
<script>
  let count = 0;

  function handleClick() {
    count += 1;
  }
</script>

<button on:click={handleClick}>
  Clicked {count} {count === 1 ? 'time' : 'times'}
</button>
```

HTML can be enhanced with conditional blocks and loops:

```html
{#each values as value}
  <li>{value}</li>
{/each}
```

Input elements can be bound with the bind:value attribute. Even regular variables can be made reactive by adding $: before the declaration.

```html
<script>
  let count = 0;
  $: double = count * 2;
</script>

<button on:click={e => count += 1}>Click</button>
<p>{count}</p>
<p>{double}</p>
```

In this example, without the $: statement the second label would not be updated after clicking the button. As far as I understand, the first label is updated anyway because count is assigned in the click handler.

The DOM gets updated on each assignment and on nothing else. So if you need to update an array, use the spread syntax:

```js
values = [...values, { name: newName }];
```

I think it is a nice framework to play around with and very easy to start with. However, its conventions have been broken with each major version, and it's unclear (as with every new JS framework) how popular it will become and how much support it will get. Maybe it can be used for small dev-related projects, where React or Angular would be overkill.

As for my own purposes, I'll continue to explore React in my free time, since it seems to be the most popular frontend framework, although TypeScript feels very painful and unfriendly after years of writing C#.

tags:

June 7, 2021

I have been an Apple fan for the last 5+ years, and I'm always eager to watch their presentations. They always have marketing-driven demos, but this year they outbullshitened themselves.

Between the many California-style stages and inspiring fake smiles, it's getting harder than ever to see the actual features that will help me personally in everyday life:

- Notification profiles and, especially, the automatic reply in iMessage telling people that the user is in Focus mode.

- Tab groups in Safari. Instead of several windows, use tab groups: that saves space on a small laptop screen and also helps to focus on one topic.

- async/await in Swift is long overdue. I haven't written Swift for the last 5 months, but I may come back one day, and it would be nice to have these features, which were implemented in C# a decade ago.

That's actually all the news I really care about. iPad multitasking and the Playgrounds enhancements look promising, but I am not an active iPad user. Not every WWDC has to be amazing (like last year's, with widgets, the App Library and Apple silicon Macs); this one is a quiet one. Which is fine, I just wish the presentation were more genuinely inspiring and less like a pinkish kindergarten world.

tags: apple

June 6, 2021

It is the beginning of June, which means it is time for the first post of the year.

Enum flags are a useful wrapper around bitmasks and can be used to store several flags in one field or property. Internally they are just integers where each bit represents the state of one flag, which can be set on or off.

For example, let's define what next weekend should look like by writing down the possible plans:

```csharp
[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```

Here each state has its own bit and can be combined with any other one. By adding the Flags attribute we tell .NET to treat this enum as a set of bit flags; for example, ToString() then lists the names of all set members instead of printing a number.
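A small sketch of the usual bit operations on this enum (set, clear, test) and of what [Flags] changes in ToString(). The enum is repeated so the snippet runs on its own:

```csharp
using System;

var plans = WeekendState.NONE;
plans |= WeekendState.ROCK;  // set a bit
plans |= WeekendState.ROLL;  // set another bit
plans &= ~WeekendState.ROLL; // clear it again

bool rocking = plans.HasFlag(WeekendState.ROCK);                 // bit is set
bool rolling = (plans & WeekendState.ROLL) == WeekendState.ROLL; // same test without HasFlag

// With [Flags], ToString() lists the set members; without it, ROCK | ROLL would print as "12".
string combined = (WeekendState.ROCK | WeekendState.ROLL).ToString();
Console.WriteLine($"{plans} {rocking} {rolling} {combined}");

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```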

And let's make a simple controller that will predict our (hardcoded) future:

```csharp
[ApiController]
[Route("[controller]")]
public class WeekendController : ControllerBase
{
    [HttpGet]
    public WeekendState Get() =>
        WeekendState.DRUGS | WeekendState.DONUTS;
}
```

If we call this endpoint, we get 18: DONUTS is 10000 in binary, i.e. 16, and DRUGS is 10, i.e. 2.

2 + 16 = 18, easy. But if you don't see the backend code, it is not so easy. I'm asking the second main question of the universe, what should I do on the weekend, and I'm getting 18? What does this even mean?
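To see where 18 comes from, and how a client would have to decode it by hand, here is a small sketch that prints the binary form and checks each defined non-zero flag (`Enum.GetValues<T>()` needs .NET 5 or later):

```csharp
using System;
using System.Collections.Generic;

var state = WeekendState.DRUGS | WeekendState.DONUTS;
int raw = (int)state;                   // the number the endpoint returns
string bits = Convert.ToString(raw, 2); // binary form of that number

// Decoding by hand: check every defined non-zero member against the raw value.
var decoded = new List<WeekendState>();
foreach (var flag in Enum.GetValues<WeekendState>())
{
    if (flag != WeekendState.NONE && ((WeekendState)raw).HasFlag(flag))
        decoded.Add(flag);
}

Console.WriteLine($"{raw} = 0b{bits} = {string.Join(" | ", decoded)}");

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```

Every consumer of the API would need its own copy of these bit values to do this, which is why returning names is the better contract.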

We need to serialise enum flags as strings. The easiest way to do that is to add JSON serializer options in Startup:

```csharp
services
    .AddControllers()
    .AddJsonOptions(options =>
        options
            .JsonSerializerOptions
            .Converters.Add(new JsonStringEnumConverter())
    );
```

Here JsonStringEnumConverter is the built-in way to serialise enums as strings. After these changes our little endpoint returns "DRUGS, DONUTS", which is an improvement. But the frontend (or whoever uses the API) may not like a string of comma-separated values. The most logical way would be to return an array. To do that we need to write our own JsonConverter:
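The difference can be reproduced without ASP.NET, directly with System.Text.Json. A minimal sketch, assuming the same WeekendState enum:

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

var state = WeekendState.DRUGS | WeekendState.DONUTS;

// Default behaviour: the enum is written as its underlying number.
string asNumber = JsonSerializer.Serialize(state);

// With JsonStringEnumConverter the flags are written as one comma-separated string.
var options = new JsonSerializerOptions();
options.Converters.Add(new JsonStringEnumConverter());
string asString = JsonSerializer.Serialize(state, options);

Console.WriteLine(asNumber); // 18
Console.WriteLine(asString); // "DRUGS, DONUTS"

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```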

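```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

public class FlagJsonConverter<T> : JsonConverter<T> where T : struct, Enum
{
    public override T Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
    {
        throw new NotImplementedException(); // TODO
    }

    public override void Write(Utf8JsonWriter writer, T value, JsonSerializerOptions options)
    {
        throw new NotImplementedException(); // TODO
    }
}
```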

And register it for the WeekendState type:

```csharp
services
    .AddControllers()
    .AddJsonOptions(options =>
    {
        options
            .JsonSerializerOptions
            .Converters.Add(new FlagJsonConverter<WeekendState>());
        options
            .JsonSerializerOptions
            .Converters.Add(new JsonStringEnumConverter());
    });
```

Note: the order here is important, since the first converter that can handle a type is the one that gets used.

The first method in our new converter is Read, which takes values from the Utf8JsonReader and does its best to convert them into the required enum (the converter class is generic and can be used for any enum):

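```csharp
public override T Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
{
    // Read the JSON array of flag names, e.g. ["ROCK", "ROLL"].
    var deserialised = JsonSerializer.Deserialize<string[]>(ref reader);
    // Join them back into "ROCK,ROLL", the format Enum.TryParse understands.
    var textValue = string.Join(',', deserialised ?? Array.Empty<string>());

    return Enum.TryParse<T>(textValue, ignoreCase: true, out T result)
        ? result
        : (T)Enum.Parse(typeToConvert, "0"); // fall back to the zero value (NONE)
}
```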

The input here is the parsed JSON token, which we convert to a string array (if possible) and then join back into a comma-separated string. Maybe there is a more elegant solution, but this was the fastest to code.

Then this string is parsed with the default enum parser. If that fails, it falls back to parsing the zero value, which leads to the simplest result (WeekendState.NONE).
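The parsing step relies on `Enum.TryParse` accepting a comma-separated list of names. A quick sketch of how it behaves for the inputs the converter can meet; note that numeric strings such as "18" would be accepted as well:

```csharp
using System;

// Names joined the same way the converter does it; ignoreCase makes "rock" match ROCK.
string joined = string.Join(',', new[] { "rock", "ROLL" });
bool known = Enum.TryParse<WeekendState>(joined, ignoreCase: true, out var parsed);

// An empty array joins to "", which does not parse, so the converter falls back to zero.
bool empty = Enum.TryParse<WeekendState>("", ignoreCase: true, out _);

// One unknown name makes the whole parse fail.
bool unknown = Enum.TryParse<WeekendState>("ROCK,KARAOKE", ignoreCase: true, out _);

// The zero fallback is the same value as default(WeekendState).
var fallback = (WeekendState)Enum.Parse(typeof(WeekendState), "0");

Console.WriteLine($"{known} {parsed} | {empty} | {unknown} | {fallback}");

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```

Since the fallback always equals `default(T)`, returning `default` would avoid the second parse.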

The other method is Write:

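```csharp
public override void Write(Utf8JsonWriter writer, T value, JsonSerializerOptions options)
{
    writer.WriteStartArray();

    // HasFlag(NONE) is true for every value, so skip the zero member explicitly.
    var values = Enum.GetValues<T>().Where(e => !e.Equals(default(T)) && value.HasFlag(e));

    foreach (var val in values)
        writer.WriteStringValue(val.ToString());

    writer.WriteEndArray();
}
```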

This takes the existing value, iterates over all the flags set in it and converts them into strings, wrapping them into an array along the way.
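Put together, the converter can be exercised without a web host. A minimal sketch, assuming .NET 5 or later: it assembles Read and Write from above, skips the zero member in Write (otherwise NONE is always written, because `HasFlag(NONE)` is true for every value), and uses `default` as the zero fallback:

```csharp
using System;
using System.Linq;
using System.Text.Json;
using System.Text.Json.Serialization;

var options = new JsonSerializerOptions();
options.Converters.Add(new FlagJsonConverter<WeekendState>());

string json = JsonSerializer.Serialize(WeekendState.DRUGS | WeekendState.DONUTS, options);
string none = JsonSerializer.Serialize(WeekendState.NONE, options);
var back = JsonSerializer.Deserialize<WeekendState>(json, options);

Console.WriteLine($"{json} {none} {back}");

public class FlagJsonConverter<T> : JsonConverter<T> where T : struct, Enum
{
    public override T Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
    {
        // ["ROCK", "ROLL"] -> "ROCK,ROLL", the format Enum.TryParse understands.
        var names = JsonSerializer.Deserialize<string[]>(ref reader, options) ?? Array.Empty<string>();
        return Enum.TryParse<T>(string.Join(',', names), ignoreCase: true, out T result)
            ? result
            : default; // zero value, e.g. WeekendState.NONE
    }

    public override void Write(Utf8JsonWriter writer, T value, JsonSerializerOptions options)
    {
        writer.WriteStartArray();
        // Skip the zero member: HasFlag(NONE) is true for every value.
        foreach (var flag in Enum.GetValues<T>().Where(f => !f.Equals(default(T)) && value.HasFlag(f)))
            writer.WriteStringValue(flag.ToString());
        writer.WriteEndArray();
    }
}

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```

With the zero member skipped, NONE serialises to an empty array, which matches the `"[]"` case in the deserialisation tests.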

And now the result of our beautiful endpoint is:

That is exactly what I needed.

And as a final step, here are xUnit tests for deserialisation:

```csharp
[Theory]
[InlineData("[\"DONUTS\"]", WeekendState.DONUTS)]
[InlineData("[\"ROCK\", \"ROLL\"]", WeekendState.ROCK | WeekendState.ROLL)]
[InlineData("[]", WeekendState.NONE)]
public void TestDeserialisation(string input, WeekendState expected)
{
    // The whole input is available at once, so the reader gets it as the final block.
    var utf8JsonReader = new Utf8JsonReader(Encoding.UTF8.GetBytes(input));
    var converter = new FlagJsonConverter<WeekendState>();

    var value = converter.Read(ref utf8JsonReader, typeof(WeekendState), new JsonSerializerOptions());

    // xUnit's Assert.Equal takes the expected value first.
    Assert.Equal(expected, value);
}
```

Bonus: spending every weekend on donuts and drugs can get tedious. Let's update the controller code:

```csharp
[HttpGet]
public WeekendState Get()
{
    Random rnd = new Random();
    // ToArray() fixes the shuffled order; a lazy OrderBy would reshuffle on every enumeration.
    var shuffled = Enum.GetValues<WeekendState>().OrderBy(c => rnd.Next()).ToArray();
    var values = shuffled.Take(rnd.Next(shuffled.Length));

    var result = WeekendState.NONE;
    foreach (var val in values)
        result |= val;

    return result;
}
```

It always contains a random number of random items. It also always includes the NONE value, which is not a proper solution.
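NONE shows up because `HasFlag(WeekendState.NONE)` is true for every value (x & 0 == 0), so a Write loop that does not skip the zero member always emits it; picking NONE in the generator changes nothing, since x | NONE == x. One alternative sketch, which swaps the shuffle-and-take approach for a coin flip per flag (a different technique from the original), draws only from the non-zero members. `RandomWeekend` is a hypothetical helper name:

```csharp
using System;
using System.Linq;

var rnd = new Random();
var weekend = RandomWeekend(rnd);
Console.WriteLine(weekend);

// Hypothetical helper: flips a coin for every real flag,
// so any combination (including no plans at all) is possible.
static WeekendState RandomWeekend(Random random)
{
    var result = WeekendState.NONE;
    foreach (var flag in Enum.GetValues<WeekendState>().Where(f => f != WeekendState.NONE))
    {
        if (random.Next(2) == 1) // 50% chance for each plan
            result |= flag;
    }
    return result;
}

[Flags]
public enum WeekendState
{
    NONE = 0,
    SEX = 1 << 0,
    DRUGS = 1 << 1,
    ROCK = 1 << 2,
    ROLL = 1 << 3,
    DONUTS = 1 << 4
}
```

Each of the 2^5 combinations is equally likely here, whereas shuffling and taking a random prefix favours smaller sets.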

tags: csharp, dev

December 11, 2020

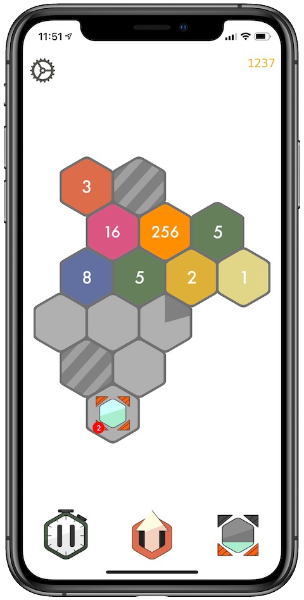

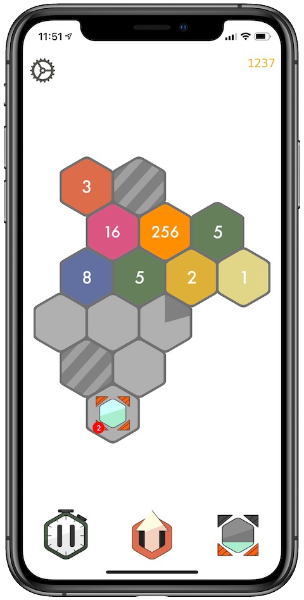

Today I'm releasing the first iOS app I have developed: HexThrees! It is a small puzzle game, greatly inspired by 2048 and Threes!. I played 2048 a lot in the past, and one day a question came to my mind: what would happen if the game field were a hexagon? After all, hexagons are the bestagons! This idea collided with the Fibonacci numbers that we used weekly at work for kanban planning.

I wanted to create a simple, easygoing puzzle game that keeps my hands busy while I listen to a podcast or talk on the phone. The merging rules might look overcomplicated at first glance, but after a while I found them much more interesting and fun to play with compared to 2048 and Threes!

Although the initial commit was made in April 2018, there were big gaps in development due to other projects, family, my real job and laziness. Most of the game logic, graphic effects and base functionality were written on the train during my commute between the small town where I live and Hamburg, where I work. But 2020 finally gave me the opportunity to finish this game, polish it (as far as time allowed) and publish it.

And this last mile was especially rough. I knew that the last percent takes more time than any other percent, but it felt especially frustrating because I kept promising myself to be done with this project as fast as I could and not to waste more time on a hobby that will not bring in any money. But still, the less stuff was left to do, the more time it seemed to take: IAP integration, l10n (only English and Russian), a stupid bug in the tutorial, screenshots for all sizes (hello, hardcoded save!), a preview video (hello, ffmpeg!), the first App Store rejection and more small issues. Tasks that I estimated at a couple of hours took a day, and when I thought I could deal with everything in a couple of days, it actually took a couple of weeks.

But now it's over. All that's left to do is to finish this post, push the "publish" button, write some messages on reddit and the relay.fm Discord, where someone might be interested in this kind of game, publish an Instagram post and relax for a while. Hopefully there will be no significant bugs.

This is a weird year. I (kinda) lost my main job, ran 5k for the first time in the summer, then 15k a month ago, which would have been totally insane for me a year ago, and now this. I have been writing small game prototypes since 2012 and always wanted to release something for real. Today is the day.

tags:


© Copyright 2026 version: f10d173

100% JS-free